Video Understanding

Video Understanding at the Science Hub brings together teams of MIT and Amazon researchers to solve audio and video challenges with machine learning. The teams tackle broad problems such as automating error detection in video streaming and creating adaptive machine learning algorithms for streaming. Solving these problems helps ensure that people can enjoy seamless video content.

Combining Virtual and Real Imagery through Deep Inverse Rendering

We seek to combine real video imagery with synthetic imagery, which has critical applications in visual effects, product placement, and augmented reality. Examples include the seamless insertion of additional objects into video frames, the replacement of one object with another, and the editing of materials. Traditional methods to achieve seamless results have relied on heavy pipelines and tedious work by visual effects artists (match move, light probe, rotoscoping, 3D modeling, etc.). In this project, we seek to dramatically simplify the process and even to fully automate it.

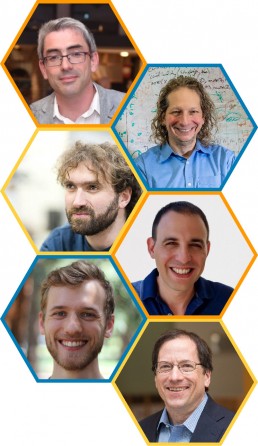

This work is a collaboration between MIT faculty Fredo Durand and Amazon researchers Ahmed Saad, Maxim Arap and Mohamed Omar. Funded by Amazon Prime Video.

Cross-Modal Representation Learning in Videos

In this work, we propose a novel framework based on cross-modal contrastive pretraining that efficiently learns a unified model for zero-shot video, audio and text understanding. In particular, we will continue to develop, in the context of video understanding, a progressive self-distillation method that has shown impressive gains in the efficiency and robustness of image-based vision-language models. A model that effectively learns to align corresponding data across these three input modalities constitutes a “Swiss Army knife” of discriminative perceptual classifiers, with the potential to emerge as the “foundation” model backbone powering downstream approaches to video analytics, scene detection and content moderation for regulatory compliance.
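To make the idea of aligning corresponding data across modalities concrete, here is a minimal NumPy sketch of a symmetric contrastive (InfoNCE-style) objective summed over the three modality pairs. It is an illustration under stated assumptions, not the project's method: the function names, the temperature value, and the random stand-in embeddings are all hypothetical, and the progressive self-distillation step is not shown.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products become cosine similarities."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.maximum(norms, eps)


def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def pairwise_contrastive_loss(emb_a, emb_b, temperature=0.07):
    """Symmetric contrastive loss; row i of emb_a is assumed to match row i of emb_b."""
    a = l2_normalize(np.asarray(emb_a, dtype=float))
    b = l2_normalize(np.asarray(emb_b, dtype=float))
    logits = a @ b.T / temperature          # (batch, batch) similarity matrix
    idx = np.arange(logits.shape[0])        # matching pairs sit on the diagonal
    loss_a_to_b = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_b_to_a = -log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (loss_a_to_b + loss_b_to_a)


def trimodal_contrastive_loss(video, audio, text, temperature=0.07):
    """Sum of the pairwise losses over the three modality pairs (an assumed design)."""
    return (pairwise_contrastive_loss(video, audio, temperature)
            + pairwise_contrastive_loss(video, text, temperature)
            + pairwise_contrastive_loss(audio, text, temperature))


# Hypothetical stand-ins for encoder outputs: 8 clips, 16-dimensional embeddings.
rng = np.random.default_rng(0)
video = rng.normal(size=(8, 16))
audio = video + 0.01 * rng.normal(size=(8, 16))
text = video + 0.01 * rng.normal(size=(8, 16))

aligned = trimodal_contrastive_loss(video, audio, text)
misaligned = trimodal_contrastive_loss(video, audio[::-1], text[::-1])
print(aligned, misaligned)
```

In this toy setup the loss is lower when row i of each modality describes the same clip than when the audio and text rows are reversed, which is the signal a contrastive pretraining objective of this kind relies on.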

This work is a collaboration between MIT researcher Aude Oliva and graduate student Alex Andonian, and Amazon researchers Raffay Hamid, Natalie Strobach, Mohamed Omar, Maxim Arap and Ahmed Saad. Funded by Amazon Prime Video.

Deep Inverse Rendering for Performance Replay

We seek to infer photorealistic 3D models from video inputs, particularly in the case of scenes that include humans. Traditional methods to achieve seamless results have relied on heavy pipelines and tedious work by visual effects artists (match move, motion capture, light probe, rotoscoping, 3D modeling, etc.). In this project, we seek to dramatically simplify the process and even to fully automate it. Potential applications of the techniques we will develop include videoconferencing and virtual replay for sports.

This work is a collaboration between MIT faculty Fredo Durand and Amazon researchers Yochai Zvik and Michael Chertok. Funded by Amazon Prime Video.

Improving Video Streaming Protocols with Counterfactual Trace-Driven Simulation

Video streaming quality of experience (QoE) depends crucially on adaptive bitrate (ABR) algorithms and transport protocols. In recent years there has been significant interest in using machine learning to optimize such protocols for specific networks. Although promising, these ML approaches have some important drawbacks. First, most techniques require a considerable amount of training data, and since the benefits of better algorithms become evident mainly in the “tail” under poor network conditions, it is challenging to collect adequate training data and evaluate the performance of learned algorithms in practice. Second, the performance benefits of learned algorithms often come at the expense of generality; if network conditions fall outside the training regime, learned policies can err in unanticipated ways. In this project, we seek to tackle these challenges through two advances: (1) high-fidelity, data-driven simulation of video delivery protocols and (2) robust policy optimization with causal modeling.
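As a rough illustration of what trace-driven simulation of an ABR algorithm involves, the sketch below replays one recorded throughput trace under two policies and compares a simple QoE score. Everything in it is an assumption made for illustration: the bitrate ladder, the chunk length, the two toy policies, and the linear QoE formula (bitrate reward minus rebuffering and switching penalties) are hypothetical and are not taken from the project.

```python
# Hypothetical bitrate ladder (kbps) and chunk duration (seconds).
BITRATES_KBPS = [300, 750, 1200, 2850, 4300]
CHUNK_SECONDS = 4.0


def rate_based_policy(buffer_s, last_throughput_kbps):
    """Pick the highest bitrate not exceeding the last observed throughput."""
    candidates = [b for b in BITRATES_KBPS if b <= last_throughput_kbps]
    return candidates[-1] if candidates else BITRATES_KBPS[0]


def lowest_bitrate_policy(buffer_s, last_throughput_kbps):
    """Conservative baseline: always request the lowest bitrate."""
    return BITRATES_KBPS[0]


def simulate(trace_kbps, policy, rebuffer_penalty=4.3):
    """Replay a throughput trace (one positive kbps value per chunk) under a policy."""
    buffer_s = 0.0
    last_throughput = 0.0      # nothing observed before the first chunk
    prev_bitrate = None
    total_rebuffer_s = 0.0
    qoe = 0.0
    for throughput in trace_kbps:
        bitrate = policy(buffer_s, last_throughput)
        download_s = bitrate * CHUNK_SECONDS / throughput
        # Playback stalls when the download outlasts the buffered video
        # (the first chunk's download time is counted as a stall here).
        rebuffer_s = max(0.0, download_s - buffer_s)
        buffer_s = max(0.0, buffer_s - download_s) + CHUNK_SECONDS
        switch = abs(bitrate - prev_bitrate) / 1000.0 if prev_bitrate is not None else 0.0
        qoe += bitrate / 1000.0 - rebuffer_penalty * rebuffer_s - switch
        total_rebuffer_s += rebuffer_s
        prev_bitrate = bitrate
        last_throughput = throughput
    return {"qoe": qoe, "rebuffer_s": total_rebuffer_s}


# A made-up trace: good conditions followed by a sharp drop (the "tail").
trace = [5000.0] * 5 + [400.0] * 5
print(simulate(trace, rate_based_policy))
print(simulate(trace, lowest_bitrate_policy))
```

Note that this naive replay assumes the recorded throughput would have been the same no matter which bitrates the policy requested. That assumption may not hold on real networks, and it is the kind of gap that counterfactual, causally informed simulation is meant to address.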

This work is a collaboration between MIT faculty Pouya Hamadanian, Arash Nasr-Esfahany, Mohammad Alizadeh and Devavrat Shah, and Amazon researcher Zahaib Akhtar. Funded by Amazon Prime Video.

Reconstructing 3D Video from 2D Video

A long-standing problem in computer vision is to reconstruct a full 3D model of a scene given only sparsely sampled 2D observations. This problem remains unsolved for dynamic, moving scenes, like sports TV streaming, where multiple camera angles of fast-developing events are available. Based on recent developments in neural rendering, we will construct a model that takes as input a set of 2D videos, and reconstructs a 3D representation in the form of a neural radiance field. By solving for the 3D scene flow across time, we explicitly represent the dynamic scene elements throughout the video. This type of model will allow us to reconstruct the underlying 3D scene, render it from novel camera perspectives, and edit the scene to consider alternative developments.
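For readers unfamiliar with neural radiance fields, the sketch below shows the standard volume-rendering step that turns per-sample densities and colors along one camera ray into a pixel color. It is a simplified illustration, not the project's model: in an actual radiance field the densities and colors would come from a learned network queried at 3D sample positions (and, for dynamic scenes, at a time), whereas here they are hand-written arrays, and the scene-flow component is not shown. The function name `composite_ray` is hypothetical.

```python
import numpy as np


def composite_ray(densities, colors, deltas):
    """Alpha-composite samples along one ray, ordered from near to far.

    densities: (n,) non-negative volume density at each sample
    colors:    (n, 3) RGB color at each sample
    deltas:    (n,) distance covered by each sample along the ray
    """
    densities = np.asarray(densities, dtype=float)
    colors = np.asarray(colors, dtype=float)
    deltas = np.asarray(deltas, dtype=float)
    # Opacity contributed by each sample.
    alpha = 1.0 - np.exp(-densities * deltas)
    # Fraction of light that reaches each sample without being absorbed earlier.
    transmittance = np.concatenate(([1.0], np.cumprod(1.0 - alpha)[:-1]))
    weights = transmittance * alpha
    return weights @ colors, weights


# Hand-written example: empty space, then a nearly opaque green surface,
# then a blue surface hidden behind it.
densities = [0.0, 1e6, 1e6]
colors = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
deltas = [0.1, 0.1, 0.1]
pixel, weights = composite_ray(densities, colors, deltas)
print(pixel, weights)
```

Because the rendered pixel is a differentiable function of the densities and colors, a model of this kind can be fit to 2D observations by comparing rendered pixels with the pixels of the input videos, and then rendered from new camera positions.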

This work is a collaboration between MIT faculty Vincent Sitzmann, Fredo Durand, William Freeman and Joshua Tenenbaum, and Amazon researchers Yochai Zvik and Michael Chertok. Funded by Amazon Prime Video.

Segmenting Objects in the Long Tail

Removing, replacing, or adding objects within a video is a challenging task, requiring estimation of imaging parameters, scene layout, and object identification and segmentation. In this project, given an input video, we aim to identify, segment, and track each of the objects depicted in a scene. A main challenge is that we cannot anticipate all the objects that will be encountered in videos. Our new unsupervised object segmentation algorithm addresses this long tail of object identities by learning from many example images drawn from the target distribution. We propose to extend this method to video by imposing temporal consistency and motion tracking. The resulting system should address many of the current hurdles in creating object-centered representations of videos, a crucial step in video advertising.
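One simple way to picture temporal consistency is to carry object identities from one frame to the next by matching segmentation masks that overlap strongly. The sketch below does this with a greedy intersection-over-union (IoU) match. It is a deliberately basic stand-in, not the project's algorithm: the function names, the IoU threshold, and the toy masks are hypothetical, and a real system would also use motion estimates and learned features.

```python
import numpy as np


def mask_iou(a, b):
    """Intersection-over-union of two boolean masks of the same shape."""
    union = np.logical_or(a, b).sum()
    return np.logical_and(a, b).sum() / union if union > 0 else 0.0


def propagate_ids(prev_masks, curr_masks, min_iou=0.5):
    """Assign object IDs to the current frame's masks.

    prev_masks: dict mapping integer object ID -> boolean mask (previous frame)
    curr_masks: list of boolean masks (current frame, no IDs yet)
    Returns a dict mapping object ID -> mask for the current frame.
    """
    scored = sorted(
        ((mask_iou(pm, cm), pid, ci)
         for pid, pm in prev_masks.items()
         for ci, cm in enumerate(curr_masks)),
        key=lambda item: item[0],
        reverse=True,
    )
    assigned, used_prev, used_curr = {}, set(), set()
    for iou, pid, ci in scored:          # best overlaps first
        if iou < min_iou:
            break
        if pid in used_prev or ci in used_curr:
            continue
        assigned[pid] = curr_masks[ci]
        used_prev.add(pid)
        used_curr.add(ci)
    # Unmatched masks are treated as newly appearing objects.
    next_id = max(prev_masks, default=-1) + 1
    for ci, cm in enumerate(curr_masks):
        if ci not in used_curr:
            assigned[next_id] = cm
            next_id += 1
    return assigned


def square(top, left, size, shape=(10, 10)):
    """Toy helper: a boolean mask containing one filled square."""
    mask = np.zeros(shape, dtype=bool)
    mask[top:top + size, left:left + size] = True
    return mask


prev = {0: square(1, 1, 4), 1: square(6, 6, 4)}
curr = [square(6, 5, 4), square(0, 8, 2), square(1, 2, 4)]  # moved, new, moved
tracked = propagate_ids(prev, curr)
print(sorted(tracked))
```

Here the two squares that shifted by one pixel keep their IDs, while the small square that appears only in the second frame receives a fresh ID.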

This work is a collaboration between MIT faculty William Freeman and Amazon researchers Ahmed Saad, Maxim Arap and Mohamed Omar. Funded by Amazon Prime Video.